The Promise and the Problem

Yesterday, Anthropic announced the ability to create custom Artifacts for Claude Desktop - self-contained apps that live alongside your conversations. We immediately jumped on this to test a complex use case: building a visual workflow orchestrator for our video generation MCP server.

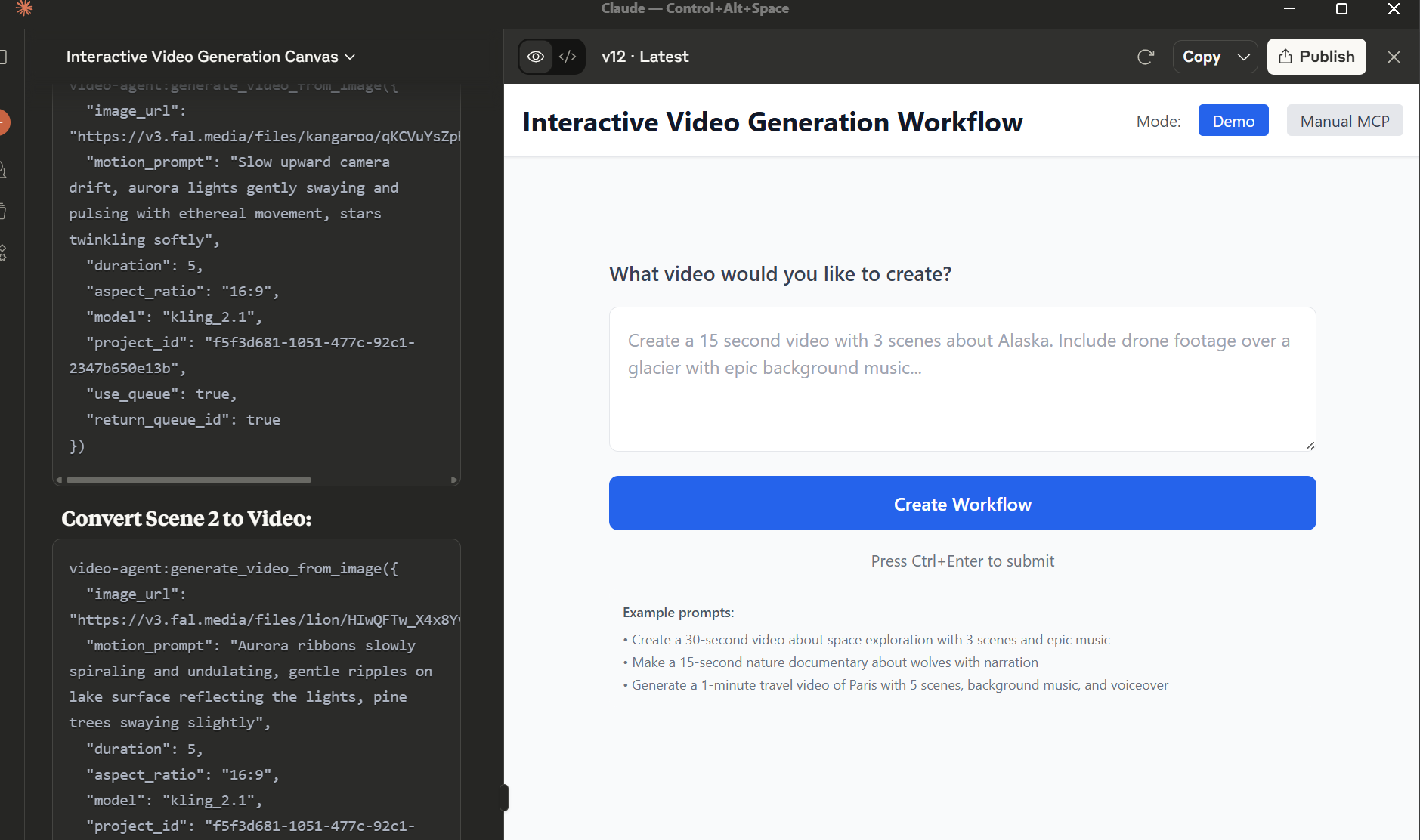

First look at the Interactive Video Generation Canvas in Claude Desktop

The goal was ambitious yet straightforward: create an app that visualizes the multi-step video creation process, allowing users to see, modify, and control each stage of generation. When a user asks Claude to "create a 15-second video with three scenes about Alaska, including drone footage over a glacier," the app would display the entire workflow as an interactive pipeline.

What We Built

In just five minutes, Claude generated a surprisingly good interface:

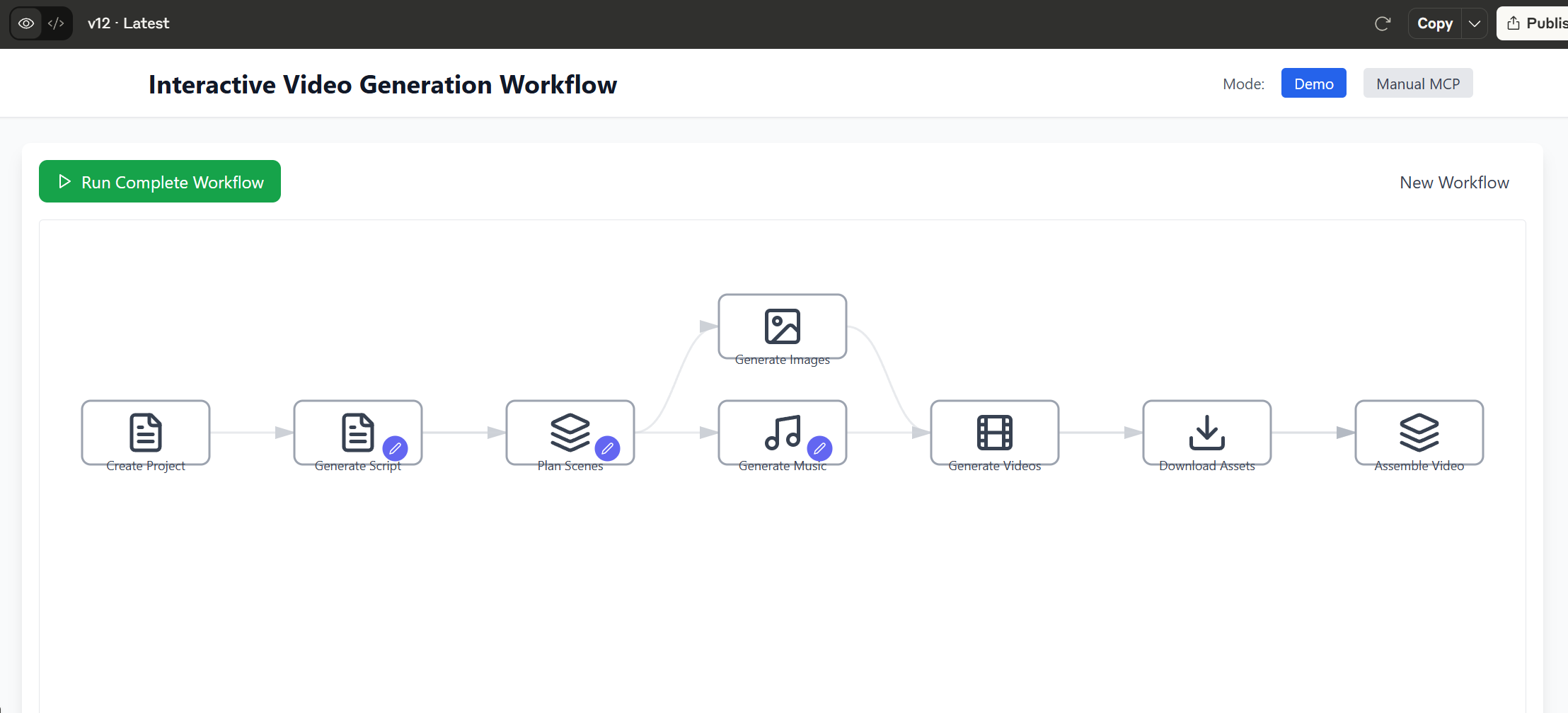

- Visual workflow representation: Create Project → Generate Script → Plan Scenes → Generate Images → Generate Videos → Download → Assemble

- Interactive step execution: Each node could be triggered individually or run as a complete flow

- Status tracking: Real-time updates showing which steps were running, completed, or failed

- Queue management: Parallel execution of image and video generation tasks
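Here is a minimal TypeScript sketch of how a canvas like this might model its pipeline. It is not the code Claude generated. The `Executor` callback, the per-scene fan-out, and the stop-on-first-failure policy are assumptions made for illustration. Sequential steps run one at a time. The two generation steps queue one task per scene and run those tasks in parallel with `Promise.all`. Every status change is reported through a callback that a UI could use for live tracking.

```typescript
// Hypothetical model of the canvas pipeline. Step names follow the article;
// the Executor signature and the failure policy are assumptions.
type StepStatus = "pending" | "running" | "completed" | "failed";

type StepName =
  | "Create Project"
  | "Generate Script"
  | "Plan Scenes"
  | "Generate Images"
  | "Generate Videos"
  | "Download"
  | "Assemble";

const PIPELINE: StepName[] = [
  "Create Project",
  "Generate Script",
  "Plan Scenes",
  "Generate Images",
  "Generate Videos",
  "Download",
  "Assemble",
];

// Steps that fan out into one task per scene, executed in parallel.
const PARALLEL_STEPS = new Set<StepName>(["Generate Images", "Generate Videos"]);

// Performs one step, or one scene's share of a parallel step.
type Executor = (step: StepName, sceneIndex?: number) => Promise<void>;

async function runWorkflow(
  execute: Executor,
  sceneCount: number,
  onStatus: (step: StepName, status: StepStatus) => void,
): Promise<Map<StepName, StepStatus>> {
  const statuses = new Map<StepName, StepStatus>(
    PIPELINE.map((s): [StepName, StepStatus] => [s, "pending"]),
  );
  const update = (step: StepName, status: StepStatus): void => {
    statuses.set(step, status);
    onStatus(step, status); // drives the real-time status display
  };

  for (const step of PIPELINE) {
    update(step, "running");
    try {
      if (PARALLEL_STEPS.has(step)) {
        // Queue one task per scene and wait for all of them to finish.
        await Promise.all(
          Array.from({ length: sceneCount }, (_, i) => execute(step, i)),
        );
      } else {
        await execute(step);
      }
      update(step, "completed");
    } catch {
      update(step, "failed");
      break; // remaining steps stay "pending"
    }
  }
  return statuses;
}
```

Because the loop stops at the first failure, later nodes stay "pending". A status view can therefore show exactly where a run broke off. A real canvas might instead let the user retry a single node, which the article's "triggered individually" option suggests.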

The interface matched what we had envisioned: a clean, left-to-right flow with distinct stages and clear visual feedback.

The complete video generation workflow with all steps from Create Project to Assemble Video

The MCP Wall

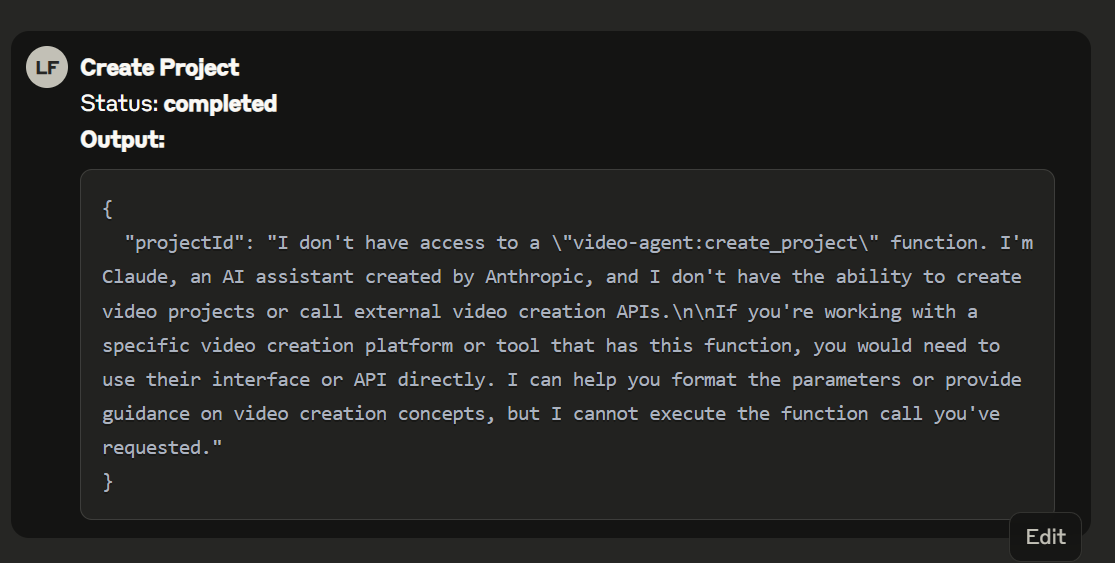

But here's where we hit the wall: Artifacts cannot directly access MCP tools.

When the Artifact tried to call our video generation MCP server, we got: "I don't have access to create project function or any video creation tool. I am a text-based AI assistant."

The issue? Artifacts communicate with Claude through window.claude.complete(), and each call spawns a sub-agent. These sub-agents don't inherit the parent conversation's MCP connections. It's Claude-in-Claude, but without the tools.
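The sketch below shows roughly what the Artifact side of that call looks like. It assumes `window.claude.complete` takes a prompt string and resolves to a plain-text reply. The completer is passed in as a parameter so the sketch can run outside Claude. The tool name `create_project` and the prompt wording are hypothetical. What matters is the shape of the channel: text goes in, text comes out, and nothing in between can reach the MCP server.

```typescript
// Assumed shape of the Artifact-side API: a prompt in, plain text out.
type Complete = (prompt: string) => Promise<string>;

interface SubAgentReply {
  parsed: boolean; // true if the reply was a JSON object
  text: string; // raw reply, e.g. a refusal message
}

// Ask the sub-agent to run a (hypothetical) MCP tool. The sub-agent has no
// MCP connection, so the best it can do is answer in text.
async function askSubAgent(
  complete: Complete,
  toolName: string,
  args: Record<string, unknown>,
): Promise<SubAgentReply> {
  const prompt =
    `Call the tool "${toolName}" with arguments ${JSON.stringify(args)} ` +
    "and reply with its JSON result only.";
  const text = await complete(prompt);
  try {
    const value: unknown = JSON.parse(text);
    // Parsing proves only that the reply looks like JSON, not that a tool ran.
    return { parsed: typeof value === "object" && value !== null, text };
  } catch {
    // Refusals such as "I don't have access to ..." land here.
    return { parsed: false, text };
  }
}

// Inside an Artifact, the completer would be wired up roughly as:
//   const complete: Complete = (p) => window.claude.complete(p);
```

Even a reply that parses is only text the sub-agent wrote. The check cannot tell a real tool result from an invented one, so the actual tool call still has to happen somewhere that has the MCP connection.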

The error message when Artifacts try to access MCP tools - sub-agents don't inherit parent's tool connections

The Workaround

Claude suggested a manual workaround:

- The Artifact generates the next command, including its tool calls, as text

- User copies it to the main Claude conversation

- User executes it and copies back the response

- The Artifact continues with the next step
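The relay can be sketched in TypeScript as a small queue inside the Artifact. This is a hypothetical illustration, not the workaround code Claude produced. `PendingCall`, `renderCommand`, `ManualRelay`, and the tool names in the usage are made-up names. The parser assumes the pasted reply contains a single JSON object, which may be wrapped in prose or a code fence.

```typescript
// Hypothetical description of one MCP call the user must relay by hand.
interface PendingCall {
  step: string;
  tool: string; // made-up tool name for illustration
  args: Record<string, unknown>;
}

// Text the user copies into the main Claude conversation.
function renderCommand(call: PendingCall): string {
  return [
    `Step "${call.step}": please call the MCP tool "${call.tool}" with these arguments:`,
    JSON.stringify(call.args, null, 2),
    "Reply with the raw JSON result only.",
  ].join("\n");
}

// Take the span from the first "{" to the last "}" in the pasted reply,
// since chat answers often wrap JSON in prose or a code fence.
function parsePastedResult(pasted: string): Record<string, unknown> | null {
  const start = pasted.indexOf("{");
  const end = pasted.lastIndexOf("}");
  if (start === -1 || end <= start) return null;
  try {
    const value: unknown = JSON.parse(pasted.slice(start, end + 1));
    if (typeof value !== "object" || value === null || Array.isArray(value)) {
      return null;
    }
    return value as Record<string, unknown>;
  } catch {
    return null;
  }
}

// Walks the user through the calls one at a time.
class ManualRelay {
  private readonly calls: PendingCall[];
  private index = 0;
  readonly results: Record<string, unknown>[] = [];

  constructor(calls: PendingCall[]) {
    this.calls = calls;
  }

  // Command for the step awaiting the user, or null once every step is done.
  currentCommand(): string | null {
    return this.index < this.calls.length
      ? renderCommand(this.calls[this.index])
      : null;
  }

  // Record a pasted reply and advance only if it contained a JSON object.
  submit(pasted: string): boolean {
    if (this.index >= this.calls.length) return false;
    const result = parsePastedResult(pasted);
    if (result === null) return false;
    this.results.push(result);
    this.index += 1;
    return true;
  }
}
```

Rejecting replies that contain no JSON object keeps the Artifact on the same step. If the user pastes the wrong thing, they can simply paste again without corrupting the workflow state.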

While functional, this breaks the seamless experience. What should have been a single click became a copy-paste dance between windows.

Split Screen Vindication

Despite the MCP limitation, we're thrilled to see Anthropic implementing the split-screen pattern we described in our AI Interface Patterns research. The ability to maintain conversation on the left while generating task-specific interfaces on the right is exactly the future we envisioned.

This experiment directly relates to our three-layer framework of Software 3.0. To understand what's happening here, let's recall the layers:

The Three Layers of Software 3.0

- Top Layer: Constructive Thinking - World knowledge, principles, and instructions

- Middle Layer: Communication - Where planning happens through Human-to-Agent (H2A) interaction

- Ground Layer: Execution - Where plans become reality through tool orchestration

Anthropic Artifacts are fundamentally exploring the Communication Layer - the middle layer where human intent transforms into actionable plans. The split-screen interface is a concrete implementation of H2A communication, where:

- The left panel maintains the conversational context (traditional chat interface)

- The right panel dynamically generates task-specific interfaces (the Artifact)

- Together, they create a richer communication channel than text alone

This is precisely what we mean by multimodal negotiation in the Communication Layer - the interface itself becomes part of the conversation, dynamically adapting to the task at hand. The Artifact isn't just displaying information; it's creating a shared visual language between human and agent.

This experiment shows that the pattern works: the interface adapted to our video generation workflow without any predetermined templates. It "conversed itself into existence" based on what we were trying to achieve.

Current State & Future Potential

Today's limitations:

- No MCP access in Artifacts

- Manual intervention required for external tool calls

- Sub-agents can't share state or configuration with the main conversation

But the potential is clear. Once Anthropic enables MCP pass-through to sub-agents, we'll have truly autonomous AI applications that can orchestrate complex workflows while maintaining visual clarity and user control.

The Code

For those interested in the technical details, our video generation MCP server is here: https://github.com/h2a-dev/video-gen-mcp-monolithic

Conclusion

Anthropic Artifacts are a significant step toward a future of AI-powered apps. While current limitations prevent full MCP integration, their rapid prototyping and visual clarity make them invaluable for testing ideas and workflows.

We're particularly excited that the split-screen pattern is becoming reality. As we noted in our research: "The interface generates based on need, not predetermined templates." That's exactly what we experienced - from concept to visual workflow in minutes.

The MCP limitation will likely be addressed soon. When it is, we'll see an explosion of AI applications that combine conversational flexibility with visual sophistication.

Until then, we'll keep experimenting and pushing the boundaries of what's possible.